by Rachel Thomas

The USF Center for Applied Data Ethics is home to a talented team of ethics fellows who often engage with current news events that intersect their research and expertise. Here are a few recent articles by or quoting CADE researchers:

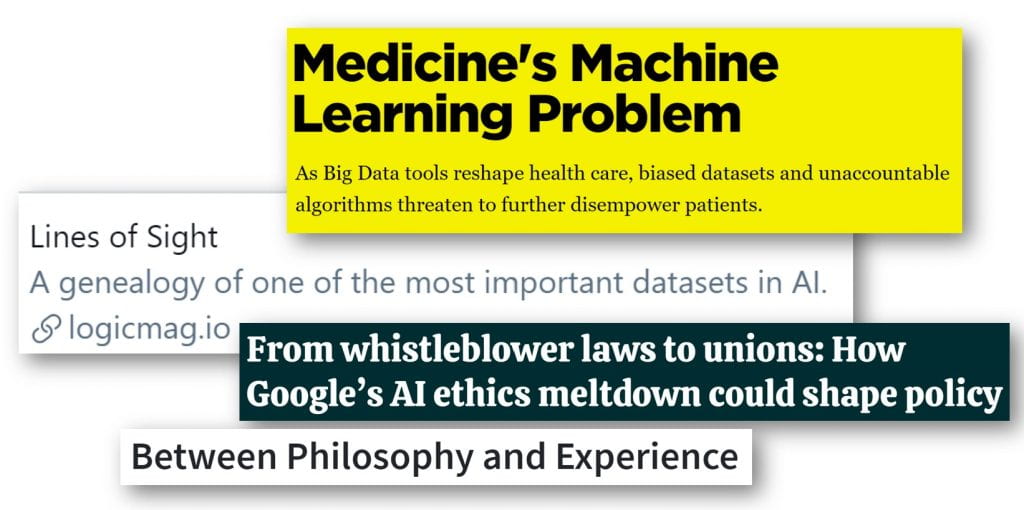

- Medicine’s Machine Learning Problem, by Rachel Thomas

- Lines of Sight: A genealogy of one of the most important datasets in AI, by Razvan Amironesei, together with Alex Hanna, Emily Denton, Andrew Smart, and Hilary Nicole

- From whistleblower laws to unions: How Google’s AI ethics meltdown could shape policy, quoting Rumman Chowdhury

- Between Philosophy and Experience, by Ali Alkhatib

Genealogy of ImageNet

CADE fellow Razvan Amironesei, together with Alex Hanna, Emily Denton, Andrew Smart, and Hilary Nicole of Google, wrote an article for Logic Magazine examining the genealogy of one of the most important datasets in AI:

The assumption that lies at the root of ImageNet’s power is that benchmarks provide a reliable, objective metric of performance… As the death of Elaine Herzberg makes clear, however, benchmarks can be misleading. Moreover, they can also be encoded with certain assumptions that cause AI systems to inflict serious harms and reinforce inequalities of race, gender, and class. Failures of facial recognition have led to the wrongful arrest of Black men in at least two separate instances, facial verification checks have locked out transgender Uber drivers, and decision-making systems used in the public sector have created a “digital poorhouse” for welfare recipients.

Read the full article at logicmag.io.

Medicine’s Looming Machine Learning Problem

I wrote about how biased datasets and unaccountable algorithms threaten to further disempower patients:

As a starting point, we can take five principles to heart. First, it is crucial to acknowledge that medical data—like all data—can be incomplete, incorrect, missing, and biased. Second, we must recognize how machine learning systems can contribute to the centralization of power at the expense of patients and health care providers alike. Third, machine learning designers and adopters must not take new systems onboard without considering how they will interface with a medical system that is already disempowering and often traumatic for patients. Fourth, machine learning must not dispense with domain expertise—and we must recognize that patients have their own expertise distinct from that of doctors. Finally, we need to move the conversation around bias and fairness to focus on power and participation.

Read the full article in the Boston Review.

How Google’s Firing of Dr. Gebru Could Impact AI Policy

Entrepreneurial ethics fellow Rumman Chowdhury was quoted in Khari Johnson’s article From whistleblower laws to unions: How Google’s AI ethics meltdown could shape policy:

“I think just the collateral damage to literally everybody: Google, the industry of AI, of responsible AI … I don’t think they really understand what they’ve done. Otherwise, they wouldn’t have done it,” Chowdhury told VentureBeat.

Chowdhury said it’s now going to be tough for people to believe any ethics team within a Big Tech company is more than just an ethics-washing operation. She also suggested Gebru’s firing introduces a new level of fear when dealing with corporate entities: What are you building? What questions aren’t you asking?

What happened to Gebru, Chowdhury said, should also lead to higher levels of scrutiny or concern about industry interference in academic research. And she warned that Google’s decision to fire Gebru dealt a credibility hit to the broader AI ethics community.

Read the full article on VentureBeat.

Between Philosophy and Experience

CADE fellow Ali Alkhatib reflected on an epistemological rift in AI ethics and what sorts of knowledge we value:

The schism I’m trying to identify is where people draw authority from. When we talk about ethics, do we draw authority from a field and from a discipline that values distance, dispassion, notions of objectivity and platonic ideals – from maxims that can be distilled and named and that we then quiz each other on (Kantian ethics, deontology, value ethics, utilitarianism, functionalism, etc…) – or do we draw authority from fields that value people’s messy, sometimes inexplicable, sometimes inarticulable experiences and reflection on those experiences?

Read his full post here.

100 Brilliant Women in AI Ethics

Rachel Thomas was named one of the “100 Brilliant Women in AI Ethics” for 2021. See the full list here.